I Didn't Know That!: Top 4 DeepSeek Facts of the Decade


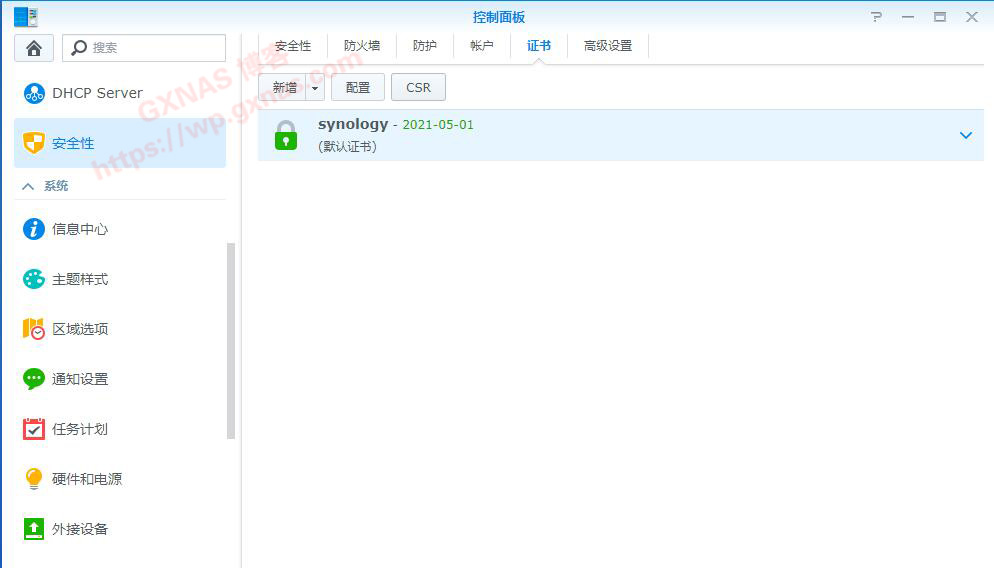

China's 'DeepSeek' confirms U.S. Recently, commenting on TikTok, Trump downplayed the potential threats it poses to the U.S. DeepSeek’s potential ties to the Chinese government are prompting growing alarm in the U.S. As DeepSeek use increases, some are concerned that its models' stringent Chinese guardrails and systemic biases could be embedded across all kinds of infrastructure. Our team therefore set out to investigate whether we could use Binoculars to detect AI-written code, and which factors might affect its classification performance. Developments at external companies such as DeepSeek are therefore broadly part of Apple's continued involvement in AI research. "How are these two companies now competitors?" DeepSeek-R1 is now live and open source, rivaling OpenAI's o1 model. A notable feature of the DeepSeek-R1 model is that it explicitly shows its reasoning process inside the `<think>` tags included in its response to a prompt. Parameters shape how a neural network transforms input (the prompt you type) into generated text or images.
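Because DeepSeek-R1 wraps its reasoning in `<think>...</think>` tags, a client can separate that reasoning from the final answer with a little text processing. The sketch below assumes the raw response text contains one complete `<think>...</think>` block (serving setups may differ); the function name `split_reasoning` and the sample response are hypothetical, made up for illustration.

```python
import re

# Non-greedy match; DOTALL lets the reasoning span multiple lines.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(response: str) -> tuple[str, str]:
    """Hypothetical helper: return (reasoning, answer) from a raw model response.

    If no <think>...</think> block is found, the reasoning is empty and the
    whole response is treated as the answer.
    """
    match = THINK_BLOCK.search(response)
    if match is None:
        return "", response.strip()
    reasoning = match.group(1).strip()
    # Everything outside the tagged block is the user-facing answer.
    answer = (response[: match.start()] + response[match.end():]).strip()
    return reasoning, answer


# Invented example response, not real model output.
raw = "<think>2 + 2 means adding two and two.</think>The answer is 4."
reasoning, answer = split_reasoning(raw)
print(reasoning)
print(answer)
```

Returning an empty reasoning string rather than raising keeps the helper usable on responses that contain no tagged block at all.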


The research suggests you can quantify sparsity as the percentage of all the neural weights you can shut down, with that percentage approaching but never equaling 100% of the neural net being "inactive". In this first demonstration, The AI Scientist conducts research in diverse subfields within machine learning, discovering novel contributions in popular areas such as diffusion models, transformers, and grokking. Tensorgrad is a tensor & deep learning framework. The same economic rule of thumb has held for every new generation of personal computers: either a better result for the same money or the same result for less money. That finding explains how DeepSeek could use less computing power yet reach the same or better results simply by shutting off more parts of the network. Apple AI researchers, in a report published Jan. 21, explained how DeepSeek and similar approaches use sparsity to get better results for a given amount of computing power.
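To make "the percentage of neural weights you can shut down" concrete, here is a toy sketch that treats a layer as a boolean on/off mask and reports the share that is off. The mask representation and the function name `sparsity` are illustrative assumptions; models like DeepSeek switch off whole groups of weights rather than individual entries in a hand-written mask.

```python
def sparsity(active_mask: list[list[bool]]) -> float:
    """Share of weights switched off: 0.0 is fully dense, values approach 1.0.

    Hypothetical helper for illustration. A mask with every weight off is
    rejected, since such a network computes nothing; this is why sparsity
    approaches but never equals 100%.
    """
    flat = [on for row in active_mask for on in row]
    if not flat:
        raise ValueError("empty mask")
    if not any(flat):
        raise ValueError("at least one weight must stay active")
    return flat.count(False) / len(flat)


# Toy 2x4 layer in which 6 of the 8 weights are switched off.
mask = [
    [True, False, False, False],
    [False, True, False, False],
]
print(sparsity(mask))
```

Here 6 of 8 weights are off, so the function reports a sparsity of 0.75.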

Graphs show that for a given neural net, on a given computing budget, there is an optimal amount of the network that can be turned off to reach a given level of accuracy. Abnar and team ask whether there is an "optimal" level of sparsity in DeepSeek and similar models: for a given amount of computing power, is there an optimal number of those neural weights to turn on or off? Our research investments have enabled us to push the boundaries of what’s possible on Windows even further, at the system level and at the model level, resulting in innovations like Phi Silica. Even if the individual agents are validated, does that mean they are validated together? They are not meant for mass public consumption (although you are free to read/cite), as I'll only be noting down information that I care about. Amazon wants you to succeed, and you'll find considerable help there.

It’s like, say, the GPT-2 days, when there were kind of initial signs of systems that could do some translation, some question answering, some summarization, but they weren't super reliable. Right now, for even the smartest AI to recognize, say, a stop sign, it has to have data on every conceivable visual angle, from any distance, and in every possible light. As Abnar and team said in technical terms: "Increasing sparsity while proportionally expanding the total number of parameters consistently leads to a lower pretraining loss, even when constrained by a fixed training compute budget." The term "pretraining loss" is the AI term for how accurate a neural net is: a lower loss means more accurate predictions. The artificial intelligence (AI) market, and the entire stock market, was rocked last month by the sudden popularity of DeepSeek, the open-source large language model (LLM) developed by a China-based hedge fund that has bested OpenAI's best on some tasks while costing far less.
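A back-of-envelope sketch of why "expanding the total number of parameters" can fit inside "a fixed training compute budget". It assumes the widely used rule of thumb that training compute is roughly 6 × (active parameters) × (training tokens), and that sparsity is the share of parameters left inactive for each token. The budget figures and function names are hypothetical, not numbers from the Apple report.

```python
def total_params(active_params: float, sparsity: float) -> float:
    """Total parameter count if only a (1 - sparsity) share is active per token."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    return active_params / (1.0 - sparsity)


def training_flops(active_params: float, tokens: float) -> float:
    """Rough training compute: ~6 FLOPs per active parameter per token (assumed rule of thumb)."""
    return 6.0 * active_params * tokens


# Hypothetical budget: 1B active parameters trained on 100B tokens.
ACTIVE = 1e9
TOKENS = 1e11

for s in (0.0, 0.5, 0.75, 0.9):
    print(
        f"sparsity={s:.2f}  total params={total_params(ACTIVE, s):.2e}  "
        f"training FLOPs={training_flops(ACTIVE, TOKENS):.2e}"
    )
```

Because compute in this approximation depends only on the active parameters, every row reports the same FLOPs while the total parameter count grows tenfold between sparsity 0.0 and 0.9. Whether that larger, sparser model actually reaches a lower pretraining loss is the empirical claim the researchers tested; this arithmetic does not show it.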

